Why technical accountants make for great context engineers

You're not late; you're right on time

Hey there,

I’m starting the year a bit behind schedule, and I’ve decided that imperfection is the muscle I’m intentionally building.

In hindsight, the delay helped. It gave me time to pause and reflect—a prompt I use often, both for myself and for AI. That pause created space to think about what actually makes us effective as subject matter experts.

It isn’t just knowing the rules.

It’s the curation of context.

If you’ve been feeling overwhelmed by AI—by the tools, the hype, the pressure to “keep up”—I want to offer a different frame: You already have the most important skill. You’ve been doing it for years.

Let me show you what I mean.

This newsletter is brought to you for free by:

Simplify Complex Revenue Recognition: Automate revenue recognition, gain real-time insights, and ensure ASC 606 / IFRS 15 compliance, all while closing books faster.

A Note on Community

Over the past year, I’ve been fortunate to build a community of 600+ technical accountants through Gaapsavvy. What I’ve learned from hosting shareforums and knowledge sessions is that we all have something unique to contribute.

Some people are building automation tools. Others are vibe coding reconciliations. Some are diving deep into technical guidance interpretations.

This article shares what I’ve been learning: context engineering for technical accounting. It’s not the only approach to AI, and it’s not right for every situation. But it’s what I’ve been figuring out, and I’m sharing it because that’s what we do in community—we learn, we share, we help each other navigate complexity.

If this resonates with you, read on. If your focus is elsewhere (automation, operational accounting, tool-building), that’s valuable too. We need all of it.

📋 In this article, you’ll learn:

Why context engineering matters for technical accounting (not just generic AI tips)

How AI actually processes information—and why most prompts fail

The 8-step framework I use to set up persistent context for revenue recognition, equity, and leases

Real examples from pricing analysis to organizational workflows

The Skill You Didn’t Know You Had

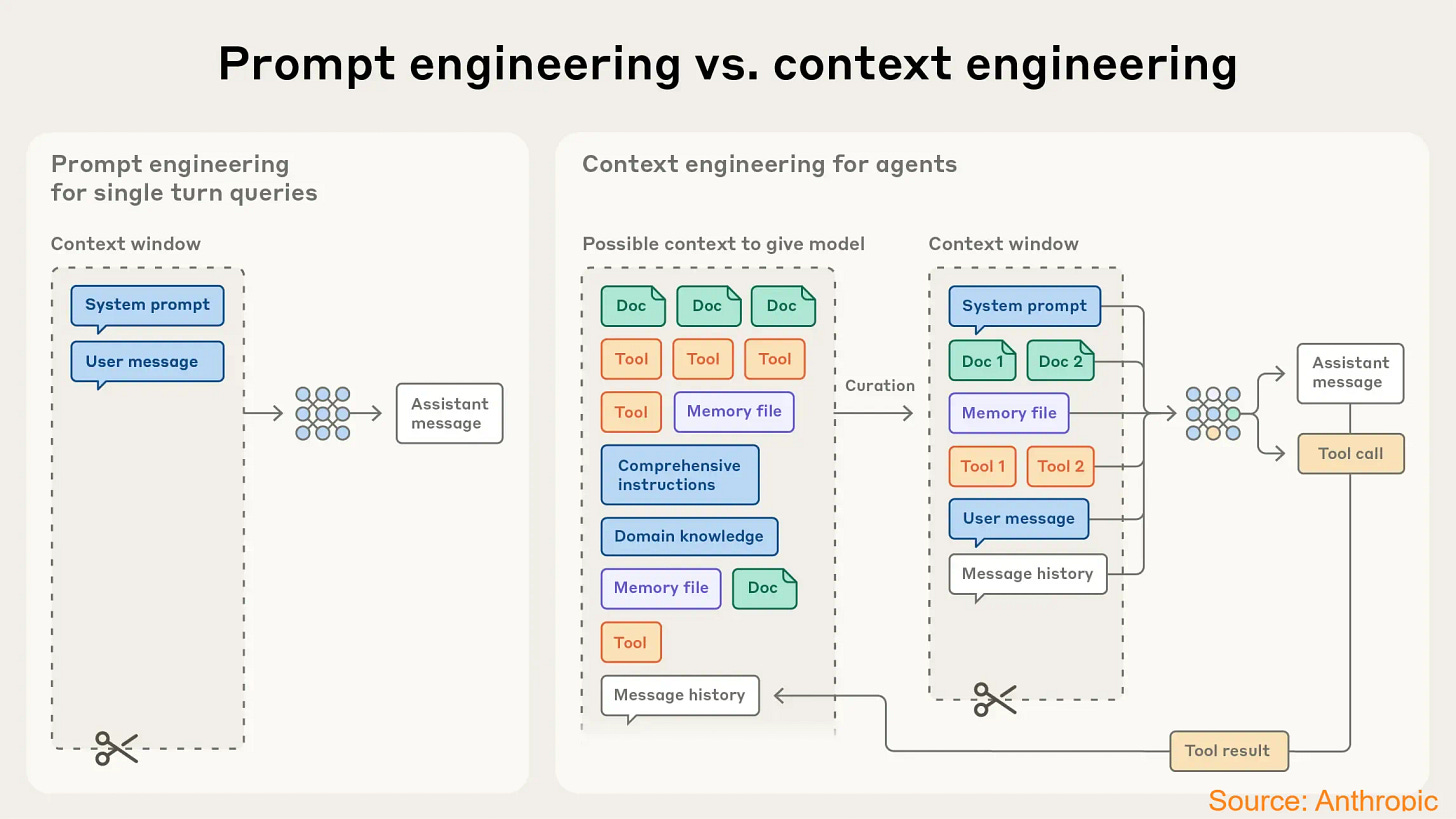

Last year I came across an article from Anthropic that described a shift from prompt engineering to context engineering.

“Context engineering is the art and science of curating what will go into the limited context window from that constantly evolving universe of possible information. In contrast to the discrete task of writing a prompt, context engineering is iterative and the curation phase happens each time we decide what to pass to the model.”

When I saw this, it made me smile. Because context engineering is exactly what we do when we write technical accounting memos.

Think about it: When you’re researching a complex revenue recognition question, you don’t dump ASC 606 into a memo. You:

Identify the core issue and what questions need answering—then analogize to questions in interpretive guides and understand how each answer affects the next

Pattern match against prior transactions you’ve seen—digging into past experiences stored in your memory

Pull in only the relevant guidance from hundreds of pages of literature to build a position

Reference prior conclusions and precedents—benchmarking to SEC filings, inquiries, and industry peers

Test your logic iteratively with managers and auditors, arguing the position from different perspectives

Explain why something matters—documenting your reasoning, refining questions, and iterating until the logic holds

That’s context engineering. You’re taking a massive universe of information and curating the smallest possible set of high-signal inputs that lead to a decision.

The difference now? You’re not just engineering context for other humans. You’re engineering it for machines too.

Why AI Fails (And Why Reasoning Models Don't Fix Everything)

Here’s what I’ve observed: AI models rarely fail because they lack capability. More often, they fail because their attention mechanism gets overwhelmed.

Attention is how AI models decide what to focus on. Think of it like highlighting important sentences while reading, except the AI does it mathematically, comparing every token (a word or word fragment) to every other token to work out what’s relevant. As context grows, this “attention budget” gets stretched increasingly thin. It’s like trying to highlight an entire textbook: eventually, nothing stands out.
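To make the “attention budget” idea concrete, here is a toy sketch of the softmax step at the heart of attention. It assumes one highly relevant token with a made-up relevance score of 3.0 competing against distractors scored 1.0; it is an illustration, not how any particular model is configured. Because softmax weights must sum to 1, every extra distractor takes a share of the weight.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Convert raw relevance scores into attention weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weight_on_key_fact(n_distractors: int, key_score: float = 3.0,
                       distractor_score: float = 1.0) -> float:
    """Weight left for one relevant token competing with n less relevant ones.

    The scores are illustrative, not taken from any real model.
    """
    scores = [key_score] + [distractor_score] * n_distractors
    return softmax(scores)[0]


for n in (10, 100, 1_000, 10_000):
    print(f"{n:>6} distractors -> weight on key fact: {weight_on_key_fact(n):.4f}")
```

With these made-up scores, the key fact's weight falls from roughly 0.42 with 10 distractors to below 0.001 with 10,000.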

This creates something called context rot—a documented phenomenon where AI performance degrades as input length increases, even on simple tasks. Too much information—irrelevant examples, conflicting guidance, tangential details—causes the model to lose signal in the noise.

It’s the same reason a junior accountant will copy-paste an entire interpretive guide into a memo instead of pulling the three relevant paragraphs. It’s the same reason a bad technical memo buries the conclusion under pages of background.

To get better outcomes, you don’t just add more data. You curate the smallest possible set of high-signal inputs.
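Here is a minimal sketch of that curation step. It assumes a naive keyword-overlap score and the rough rule of thumb of about four characters per token. `Passage`, `relevance`, and `curate` are hypothetical names, and real retrieval tools use far better relevance signals. The point is only that the budget goes to high-signal passages and zero-signal ones are dropped rather than padded in.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical label for where the text came from
    text: str


def estimate_tokens(text: str) -> int:
    # Rough rule of thumb: about 4 characters per token for English text.
    return max(1, len(text) // 4)


def relevance(passage: Passage, question_terms: set[str]) -> int:
    # Naive signal: how many question terms appear in the passage.
    words = {w.strip(".,;:()").lower() for w in passage.text.split()}
    return len(words & question_terms)


def curate(passages: list[Passage], question_terms: set[str],
           token_budget: int) -> list[Passage]:
    """Keep the highest-signal passages that fit the budget; drop zero-signal ones."""
    ranked = sorted(passages, key=lambda p: relevance(p, question_terms), reverse=True)
    selected, used = [], 0
    for p in ranked:
        if relevance(p, question_terms) == 0:
            break  # everything from here on is noise for this question
        cost = estimate_tokens(p.text)
        if used + cost <= token_budget:
            selected.append(p)
            used += cost
    return selected


library = [
    Passage("A", "An option gives rise to a material right if it provides a discount "
                 "the customer would not otherwise receive."),
    Passage("B", "Office lease renewal options are assessed under a different standard."),
    Passage("C", "Variable consideration is estimated using the expected value or the "
                 "most likely amount."),
]
chosen = curate(library, {"material", "right", "option", "discount"}, token_budget=50)
print([p.source for p in chosen])  # ['A']
```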

The accountants who understand this instinctively—who know how to curate signal from noise—are going to be the ones who make AI actually useful.

How AI Actually Processes Information

Here's the technical reality most people don't understand:

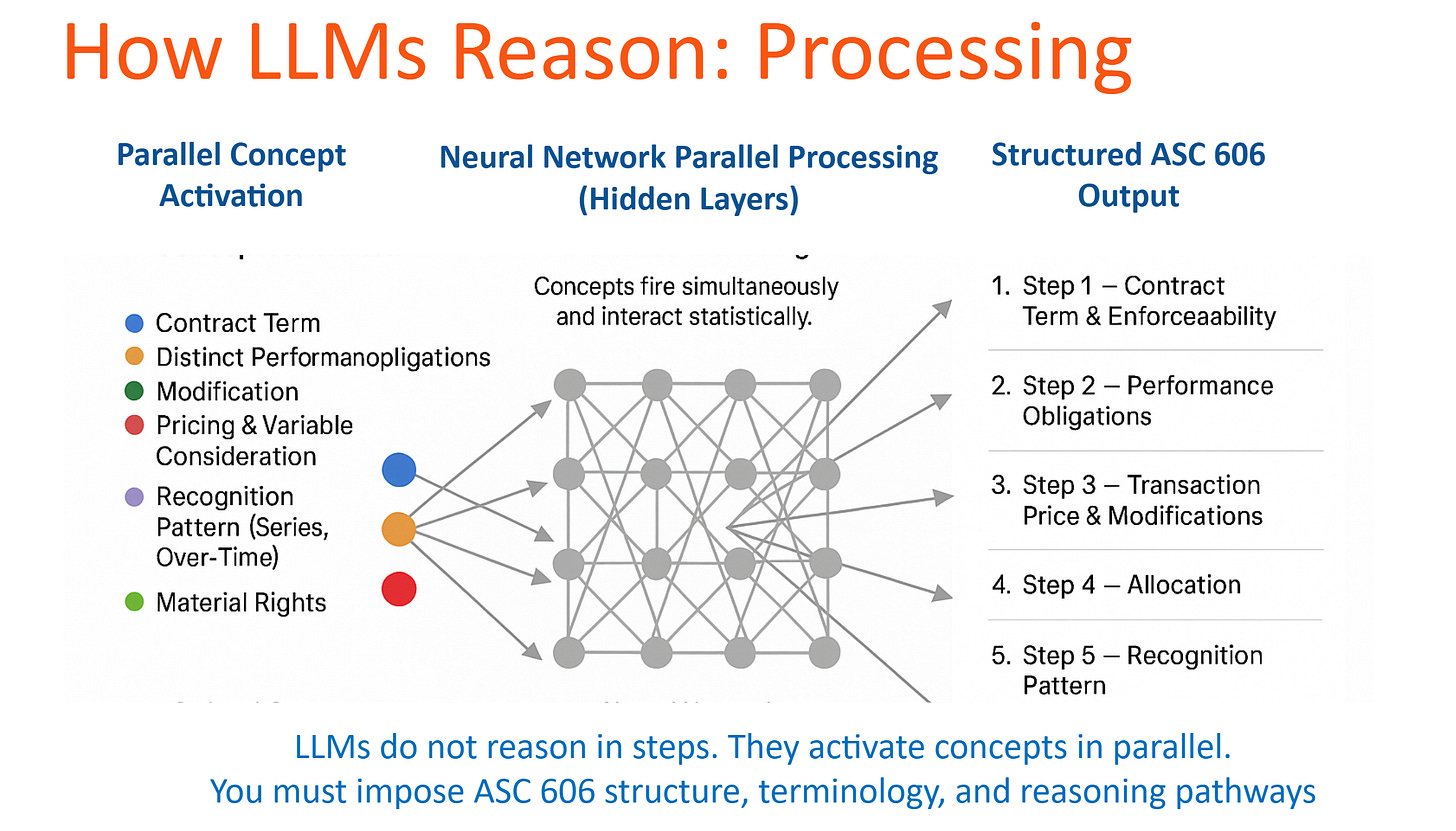

LLMs activate concepts in parallel through their neural networks—just like expert accountants do. The concepts on the left (Contract Terms, Performance Obligations, Pricing, etc.) all fire simultaneously and interact statistically through the hidden layers in the middle.

But here’s the critical part: LLMs do not reason in steps by default. They activate concepts in parallel.

The five-step structure on the right? That’s the output format—how ASC 606 tells you to document your conclusion for GAAP compliance and audit trails.

In this way, ASC 606 is an output schema, not a thinking schema. It’s optimized for reporting and auditability, not for reasoning.
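One way to picture an “output schema” is as a data structure. This hypothetical `Asc606Memo` class has one field per step of the ASC 606 five-step model. It is a sketch for illustration, not a template from the standard. Notice that it captures where conclusions go, not how you reach them.

```python
from dataclasses import dataclass, fields


@dataclass
class Asc606Memo:
    """Hypothetical memo layout mirroring the ASC 606 five-step model.

    It fixes the order in which conclusions are documented for review;
    it says nothing about the order in which an expert reasons.
    """
    step1_contract: str = ""                 # Identify the contract(s) with a customer
    step2_performance_obligations: str = ""  # Identify the performance obligations
    step3_transaction_price: str = ""        # Determine the transaction price
    step4_allocation: str = ""               # Allocate the price to the performance obligations
    step5_recognition: str = ""              # Recognize revenue when (or as) each is satisfied

    def missing_steps(self) -> list[str]:
        """Return the sections still left blank, the way a reviewer would flag them."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


memo = Asc606Memo(step1_contract="Signed annual order form with a single customer.")
print(memo.missing_steps())
```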

This is why naive prompts often fail. When you ask AI to “help with revenue recognition,” it often gives you generic, step-by-step guidance—because it’s following the structure of the documentation, not the structure of expert thinking.

Even reasoning models need to know:

Which domain to reason in (technical accounting, not general business analysis)

Which concepts to activate (ASC 606 specific, not generic revenue principles)

What quality bar to meet (Big 4 audit standards, not internet blog posts)
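Those three specifications can be written down once and reused. Here is a minimal sketch that assumes a hypothetical `ContextSpec` class, which renders domain, concepts, and quality bar into system-prompt text. The wording is illustrative. The returned string could be pasted into custom instructions or a project's instructions.

```python
from dataclasses import dataclass


@dataclass
class ContextSpec:
    domain: str          # which domain to reason in
    concepts: list[str]  # which concepts to activate
    quality_bar: str     # what standard the output must meet

    def to_system_prompt(self) -> str:
        """Render the spec as plain instruction text for a model."""
        concept_lines = "\n".join(f"- {c}" for c in self.concepts)
        return (
            f"You are working in the domain of {self.domain}.\n"
            f"Activate and reason with these concepts:\n{concept_lines}\n"
            f"Hold your analysis to this standard: {self.quality_bar}."
        )


spec = ContextSpec(
    domain="technical accounting (ASC 606), not general business analysis",
    concepts=["performance obligations", "variable consideration",
              "material rights", "standalone selling price"],
    quality_bar="Big 4 audit documentation, with specific ASC citations",
)
print(spec.to_system_prompt())
```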

Context engineering is how you aim that reasoning power at the right problems.

The 8-Step Framework I’ve Been Testing

So how do you actually apply this? How do you translate understanding parallel concept activation into something practical?

Over the past year, I’ve been experimenting with different approaches to context engineering for technical accounting. What I’ve landed on is an 8-step framework that seems to mirror how seasoned accountants actually think:

1. Concept Discovery → What core ASC 606 concepts are triggered?

2. Vocabulary Extraction → What specific terms and definitions matter?

3. Classification Debate → What are the competing interpretations? Where’s the ambiguity?

4. ASC Step-Mapping → How do these concepts map to the five-step model?

5. Step Interaction → How do multiple steps interact? (e.g., Step 2 affects Step 5)

6. Big 4 Stitching → What interpretive guidance from the firms applies?

7. References → What’s the authoritative source for each conclusion?

8. Conclusion/Risks/Disclosure → What’s the judgment call? What needs disclosure?
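Here is a sketch of the framework as data. It assumes you want both a memory-ready instruction block and a naive check of whether a draft analysis addresses each step. `render_instructions` and `missing_steps` are hypothetical helpers. The check only looks for step names as text, so it flags gaps in structure, not gaps in reasoning.

```python
FRAMEWORK = [
    ("Concept Discovery", "What core ASC 606 concepts are triggered?"),
    ("Vocabulary Extraction", "What specific terms and definitions matter?"),
    ("Classification Debate", "What are the competing interpretations? Where is the ambiguity?"),
    ("ASC Step-Mapping", "How do these concepts map to the five-step model?"),
    ("Step Interaction", "How do multiple steps interact?"),
    ("Big 4 Stitching", "What interpretive guidance from the firms applies?"),
    ("References", "What is the authoritative source for each conclusion?"),
    ("Conclusion/Risks/Disclosure", "What is the judgment call? What needs disclosure?"),
]


def render_instructions() -> str:
    """Render the framework as a numbered block for memory or project instructions."""
    lines = ["For every technical accounting question, work through:"]
    lines += [f"{i}. {name}: {question}"
              for i, (name, question) in enumerate(FRAMEWORK, start=1)]
    return "\n".join(lines)


def missing_steps(analysis: str) -> list[str]:
    """Naive review check: which step names never appear in a draft analysis?"""
    text = analysis.lower()
    return [name for name, _ in FRAMEWORK if name.lower() not in text]


draft = ("Concept Discovery: seats, credits. "
         "Step Interaction: Step 2 drives Step 4. References: 606-10-55-42.")
print(missing_steps(draft))
```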

This framework isn’t teaching AI how to reason—it’s helping me specify what to reason about and what good looks like.

When I embed this framework into ChatGPT’s memory or a Claude Project, I’ve found the quality of analysis improves significantly.

Let me show you what I mean.

What This Looks Like in Practice

I uploaded a screenshot of a typical SaaS pricing page to ChatGPT, nothing more: a seat-based pricing model with ‘good-better-best’ tiers, billing options, and AI credits.

The framework was embedded in ChatGPT’s memory. Here’s what happened:

The AI immediately identified the hidden complexity:

Most people would look at this and think “SaaS revenue, recognize over time, done.” But the AI caught something way more nuanced:

“Are AI credits that reset monthly variable consideration (Step 3), material rights (Step 2), or merely incidental benefits? This distinction matters—if credits are substantive, revenue must be constrained or allocated separately.”

Then it flagged one of the most hotly debated questions in SaaS accounting policy: Is usage-based pricing variable consideration under ASC 606, or is it an optional purchase that could give rise to a material right?

This isn’t academic. The accounting treatment differs dramatically:

Variable consideration → Estimated and recognized ratably with the appropriate constraint

Optional purchase → Not part of the current contract and therefore recognized as consumed, with any material right allocated to that option and recognized when the option is exercised or expires
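The arithmetic behind the two treatments can be sketched with made-up numbers. This sketch assumes the expected value method (ASC 606 also permits the most likely amount). It spreads the estimate evenly over a 12-month term, as described above, and deliberately leaves out the constraint, which is a judgment rather than a formula. The amounts, credit price, and usage are hypothetical. This is an illustration, not a policy conclusion.

```python
def expected_value(outcomes: list[tuple[float, float]]) -> float:
    """Expected value method: sum of probability-weighted possible amounts."""
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * amount for p, amount in outcomes)


def ratable_schedule(amount: float, months: int) -> list[float]:
    """Spread an estimate evenly over the service term (constraint not modeled)."""
    return [amount / months] * months


def consumption_schedule(price_per_credit: float, credits_used: list[int]) -> list[float]:
    """Recognize optional purchases only as credits are actually consumed."""
    return [price_per_credit * used for used in credits_used]


# Hypothetical overage outcomes for a 12-month contract: (probability, amount).
overage_estimate = expected_value([(0.5, 0.0), (0.3, 1_200.0), (0.2, 3_000.0)])
print("Variable consideration, monthly:", ratable_schedule(overage_estimate, 12)[0])

# Hypothetical: $2 per credit bought beyond the plan; usage for three months.
print("Optional purchases, monthly:", consumption_schedule(2.0, [100, 0, 250]))
```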

It walked through the framework systematically:

Concept Discovery: Identified seat-based licensing, usage credits, tiered bundles, annual billing as triggers

Vocabulary Extraction: Mapped “unlimited files” and “AI credits/month” to ASC 606 terms (stand-ready obligation, variable consideration, non-cash benefits)

Classification Debate: Questioned whether Dev Mode is “merely incremental” or materially different (requiring separate performance obligations)

Step Interaction: Noted that Step 2 conclusions (are credits distinct?) directly impact Step 4 (SSP allocation methodology)

Big 4 Stitching: Anticipated audit focus—performance obligation memo, SSP methodology, credit breakage assumptions

References: Cited ASC 606-10-25-14 (distinctness), 606-10-55-42 (material rights), 606-10-25-31 (stand-ready)

Risks/Disclosure: Under-identifying performance obligations, improper allocation of transaction price, incorrect treatment of upgrades/modifications, and enterprise contracts bypassing standard rev rec logic
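The Step 2 to Step 4 interaction can be sketched too. The allocation uses relative standalone selling prices. For the option, this sketch uses a simplified SSP estimate: the discount the customer would get, times the likelihood of exercise, ignoring any discount available without the option. All figures and names are hypothetical.

```python
def allocate_relative_ssp(transaction_price: float, ssp: dict[str, float]) -> dict[str, float]:
    """Allocate the transaction price in proportion to standalone selling prices."""
    total_ssp = sum(ssp.values())
    return {item: transaction_price * value / total_ssp for item, value in ssp.items()}


def option_ssp(discount: float, likelihood_of_exercise: float) -> float:
    """Simplified SSP estimate for an option: discount times likelihood of exercise.

    Ignores any discount the customer could get without the option.
    """
    return discount * likelihood_of_exercise


# Hypothetical contract: $12,000 annual subscription plus an option to buy
# extra AI credits at a $1,000 discount, judged 80% likely to be exercised.
ssp = {
    "subscription": 12_000.0,
    "credit_option": option_ssp(discount=1_000.0, likelihood_of_exercise=0.8),
}
allocation = allocate_relative_ssp(12_000.0, ssp)
print(allocation)  # {'subscription': 11250.0, 'credit_option': 750.0}
```

In this sketch, the $750 allocated to the option would be deferred and recognized when the credits are used or the option expires. That is why the Step 2 conclusion (is the option a material right?) drives the Step 4 numbers.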

TL;DR (Controller-Level Take): “This looks like simple SaaS pricing, but from an ASC 606 perspective, seat differentiation + AI credits + enterprise customization create real complexity. The biggest risk isn’t timing; it’s performance obligation identification and allocation.”

Without the framework:

“This is SaaS revenue recognized over time”

Generic five-step walkthrough

Maybe mentions variable consideration

No specific ASC citations

With the framework:

Identifies the Step 2 vs. Step 3 classification debate

Recognizes this as contested policy territory

Cites specific ASC sections

Anticipates audit focus areas

This is the difference between textbook analysis and expert reasoning.

The framework didn’t make the AI smarter. It made the AI think like a seasoned technical accountant who’s written dozens of these memos and knows where the judgment calls actually are.

Being an expert technical accountant is not about having perfect answers. It’s about asking the right questions. Expert level isn’t perfection—it’s knowing where the edges are.

That’s what the framework taught the AI to do. And that’s expert-level work.

How the Framework Came to Be

I didn’t set out to create a framework. I was preparing to teach a technical accounting research workshop for a revenue team that ranged from staff to director level.

The challenge: Reasoning models are incredibly good at nuance—but most people still get generic answers when they try to use AI for technical accounting. Why?

The gap wasn’t the model’s capability. The gap was context engineering.

When I use AI now, I barely think about prompts—the models “just get it.” But that’s because I’ve already done the work: ChatGPT has my 8-step framework in memory, Claude Projects have interpretive guidance pre-loaded, I ask domain-specific questions, and I can evaluate when output is right.

Beginners skip this setup and wonder why “help me with revenue recognition” returns textbook answers instead of expert analysis.

So I mapped out how I actually think when writing technical memos—not how ASC 606 tells me to document, but how I reason through problems. The insight: Beginners think sequentially (Step 1 → 2 → 3), but seasoned technical accountants think in parallel—activating multiple concepts simultaneously, just like AI does.

I used ChatGPT as a thinking partner to structure this into 8 steps. When I embedded it into workflows, the research quality jumped immediately, not from better prompts but from better context. What made it work was domain expertise, good questions, and rapid iteration until the framework matched how I actually think. I was using AI to externalize my expert thinking, not to replace it.

The framework doesn’t teach AI how to reason. It teaches you how to set up persistent context so AI reasons like a technical accountant by default.

The Memory Breakthrough

I recently sat down with Devon Coombs to compare the AI tools we actually rely on day-to-day. The insight? We’re building places where context can accumulate.

This is where persistent memory becomes the game-changer.

ChatGPT has had cross-conversation memory since early 2024—almost two years of personalization. If you’ve noticed ChatGPT “just getting you” over time, that’s why. It remembers your preferences, projects, and thinking patterns across separate chats. The 8-step framework lives in its memory—so every conversation starts with that foundation already loaded. Context engineering on autopilot.

Claude added persistent memory in fall 2025. If you’re a long-time Claude user who suddenly noticed it remembering your work patterns and preferences, that’s the shift. It excels at deep reasoning and technical research, now with context that persists across conversations. Within a single chat, it can hold up to 200K tokens of context, which makes it powerful for complex analysis.

Gemini launched memory in August 2025 and pairs it with massive context windows (1-2M tokens) and multimodal capabilities, giving it unmatched breadth for processing large amounts of diverse content.
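Here is a quick way to sanity-check whether a document set even fits in those windows. It assumes about four characters per token, a hypothetical 300-page guide at about 3,000 characters per page, and a hypothetical 8,000 tokens reserved for the answer. All three figures are rough assumptions, and actual tokenizers vary.

```python
CHARS_PER_TOKEN = 4  # rough English-text rule of thumb; real tokenizers vary


def estimate_tokens(num_chars: int) -> int:
    """Approximate token count from a character count."""
    return num_chars // CHARS_PER_TOKEN


def fits(num_chars: int, window_tokens: int, reserve_for_output: int = 8_000) -> bool:
    """True if the input plus room for the model's answer fits the window."""
    return estimate_tokens(num_chars) + reserve_for_output <= window_tokens


# Hypothetical: a 300-page interpretive guide at roughly 3,000 characters per page.
guide_chars = 300 * 3_000
for label, window in [("200K", 200_000), ("1M", 1_000_000), ("2M", 2_000_000)]:
    tokens = estimate_tokens(guide_chars)
    print(f"{label} window: ~{tokens:,} tokens -> fits: {fits(guide_chars, window)}")
```

Fitting is not the same as helping. Because of context rot, a document that fits can still drown the signal, so curation still applies.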

What this means: Memory isn’t just convenience—it’s compound expertise. Every conversation builds on the last. The framework you embed once becomes the default thinking pattern. This is why some tools start feeling “smarter” over time—they’re not getting smarter, they’re getting to know you.
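Conceptually, persistent memory is curated context that survives between sessions. Here is a toy sketch that assumes a local JSON file and a generic list of role/content messages. `MemoryStore` is hypothetical and does not reflect how ChatGPT, Claude, or Gemini actually implement memory.

```python
import json
import tempfile
from pathlib import Path


class MemoryStore:
    """Toy persistent memory: notes saved to a JSON file, prepended to each new chat."""

    def __init__(self, path: Path):
        self.path = path
        self.notes: list[str] = json.loads(path.read_text()) if path.exists() else []

    def remember(self, note: str) -> None:
        """Save a note once; it will be loaded by every later session."""
        if note not in self.notes:
            self.notes.append(note)
            self.path.write_text(json.dumps(self.notes, indent=2))

    def start_conversation(self, user_message: str) -> list[dict[str, str]]:
        """Build the opening messages with all remembered notes as system context."""
        context = "Persistent context:\n" + "\n".join(f"- {n}" for n in self.notes)
        return [
            {"role": "system", "content": context},
            {"role": "user", "content": user_message},
        ]


with tempfile.TemporaryDirectory() as tmp:
    memory_file = Path(tmp) / "memory.json"
    MemoryStore(memory_file).remember("Use the 8-step framework for ASC 606 questions.")
    later = MemoryStore(memory_file)  # a new session reloads what was saved
    messages = later.start_conversation("Are monthly AI credits a material right?")
    print(messages[0]["content"])
```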

🎤 Devon and I go deeper on how we actually use these tools—and why memory is the unlock—in our latest podcast: “The AI Tools We Actually Use in 2026”.

You’re Right on Time

The reasoning models are getting better. The tools will keep changing. But the underlying skill—context engineering—doesn’t expire.

Reasoning models can think deeply. Your job is to tell them what to think about, where to focus, and what good looks like.

Understanding how to specify domain, set quality standards, and curate context—these are the skills that make you valuable regardless of which model is trending.

You’re not learning ChatGPT. You’re learning how to interface between expert knowledge and AI reasoning.

That skill compounds.

If you’ve been feeling behind, or overwhelmed, or like you “should” understand AI better by now—I want you to know: You’re not late. You’re right on time.

The skill that matters most—you already have it. Now we’re just learning to use it in a new environment.

What’s Next

If you want to explore these ideas further, I’m hosting a few upcoming sessions:

Friday, Jan 30, 9am Pacific: AI Reporting Knowledge Share (virtual; Gaapsavvy community members only; register here)

Tuesday, Feb 25, 2:30-6:30: AI Finance Lab, a Coterie CFO <> Gaapsavvy collab at PwC in SF (50 CFO/CAO teams, hands-on AI play time + sponsor demos; in person, apply to join here)

And I’m working on something more comprehensive.

Last year, I started collecting interest for an AI Mastermind cohort. Thank you to everyone who signed up—I haven’t forgotten you, and I appreciate your patience.

Since then, I’ve been collaborating with Devon (my podcast co-host and former Google Cloud Marketplace Controller) on building a program that combines hands-on context engineering, workflow automation, and strategic AI fluency—designed specifically for technical accountants and finance leaders.

We’re finalizing details now and will share more in February. If you’re on the original waitlist, you’ll be the first to know.

Thanks for reading. If you’ve been experimenting with AI—whether it worked or failed—I’d love to hear what you’re learning. Just hit reply.

Angela

Disclaimer: This newsletter is for educational purposes only and based on lived field experience. Please consult your audit teams and internal controls before applying anything directly.
